Market Demand

The enterprise AI landscape faces a critical paradox: as language models become increasingly sophisticated, their deployment in regulated industries remains constrained by fundamental questions of reliability, consistency, and auditability.

Organizations investing in AI transformation encounter persistent challenges:

Regulatory Pressure Intensifies

- Financial services, healthcare, and legal sectors demand explainable AI architectures

- Emerging EU AI Act and similar frameworks require documented model behavior

- Compliance officers need quantifiable metrics, not qualitative assurances

Performance Uncertainty Persists

- Identical prompts yield inconsistent outputs across inference runs

- Model behavior varies unpredictably with minor input modifications

- Traditional testing frameworks fail to capture reasoning stability

Investment Risk Remains Opaque

- Organizations struggle to validate model selection decisions

- ROI calculations lack foundation in systematic performance data

- Competitive differentiation requires deeper understanding than vendor benchmarks provide

The market demands a framework that transforms AI’s inherent variability from liability into strategic intelligence.

Core Technology

TEREX introduces a new approach to reasoning assessment: it adapts established statistical methodologies to the characteristics of large language models, whose outputs vary from run to run and from prompt to prompt.

Statistical Foundation

Where others see inconsistency as a flaw, we recognize it as a measurement opportunity. TEREX systematically explores the reasoning landscape through:

- Controlled Input Variation: Sophisticated prompt engineering generates semantically equivalent queries, mapping how models respond to paraphrase, rewrite, and contextual modification

- Systematic Output Analysis: Advanced embedding techniques capture semantic variance across response distributions

- Robustness Quantification: Mathematical frameworks borrowed from reliability engineering establish confidence intervals for model behavior
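The three steps above can be illustrated with a small, hedged sketch. It assumes that each response has already been turned into an embedding vector (the vectors below are invented toy data, not TEREX output), measures semantic variance as the mean pairwise cosine distance, and uses a percentile bootstrap as one possible way to attach a confidence interval. The function names `semantic_dispersion` and `bootstrap_ci` are hypothetical and not part of TEREX.

```python
import math
import random
from itertools import combinations


def cosine_distance(u, v):
    """1 - cosine similarity; 0.0 for identical directions, 1.0 for orthogonal ones."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def semantic_dispersion(embeddings):
    """Mean pairwise cosine distance across a set of response embeddings."""
    pairs = list(combinations(embeddings, 2))
    return sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)


def bootstrap_ci(embeddings, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the dispersion (an illustrative choice)."""
    rng = random.Random(seed)
    n = len(embeddings)
    stats = sorted(
        semantic_dispersion([embeddings[rng.randrange(n)] for _ in range(n)])
        for _ in range(n_resamples)
    )
    lower = stats[int((alpha / 2) * n_resamples)]
    upper = stats[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper


# Toy embeddings for five responses to paraphrases of one query (invented data).
toy_embeddings = [
    [1.0, 0.0, 0.1],
    [0.9, 0.1, 0.0],
    [1.0, 0.1, 0.1],
    [0.0, 1.0, 0.2],  # a response that drifts semantically from the others
    [0.95, 0.05, 0.05],
]

point_estimate = semantic_dispersion(toy_embeddings)
ci_low, ci_high = bootstrap_ci(toy_embeddings)
print(f"dispersion={point_estimate:.3f}, 95% CI=({ci_low:.3f}, {ci_high:.3f})")
```

With only a handful of responses the interval is wide and rough; in practice the sample size per query would need to be much larger for the interval to be informative.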

Architectural Innovation

The framework operates across three integrated layers:

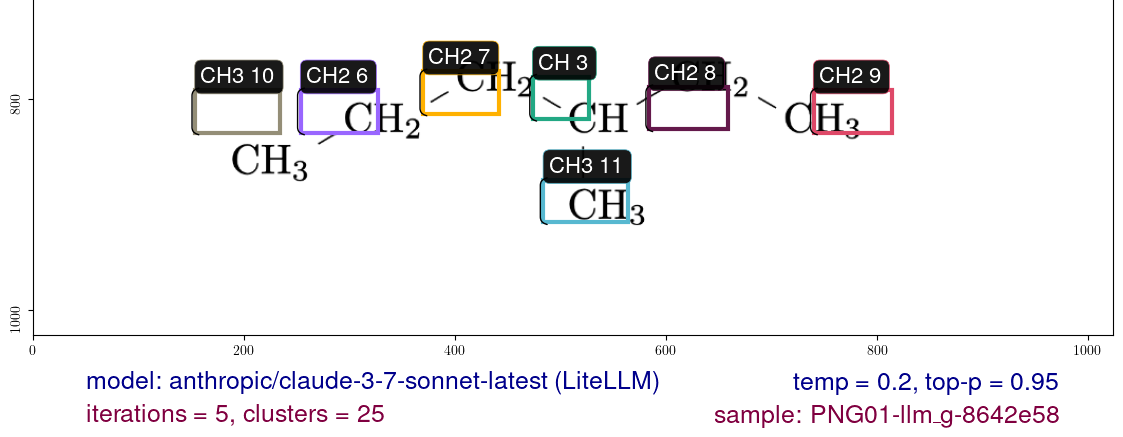

Exploration Layer

- Cross-provider compatibility via LiteLLM foundation

- Automated generation of prompt variations maintaining semantic equivalence

- Parameter space mapping across temperature, top-p, and sampling strategies
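As a rough illustration of parameter space mapping, the sketch below enumerates every combination of prompt variant, temperature, and top-p as a list of request descriptions. It is an assumption about how such a grid might be built, not the TEREX implementation: `build_run_grid` and `samples_per_cell` are hypothetical names, and the prompt variants are written by hand here rather than generated automatically.

```python
from itertools import product


def build_run_grid(prompt_variants, temperatures, top_ps, samples_per_cell=1):
    """Enumerate one request per (prompt, temperature, top_p, repeat) combination."""
    runs = []
    for prompt, temperature, top_p in product(prompt_variants, temperatures, top_ps):
        for sample_index in range(samples_per_cell):
            runs.append({
                # Keyword arguments in the shape used by chat-completion style APIs.
                "request": {
                    "messages": [{"role": "user", "content": prompt}],
                    "temperature": temperature,
                    "top_p": top_p,
                },
                # Bookkeeping only; not sent to the model.
                "sample_index": sample_index,
            })
    return runs


# Hand-written paraphrases standing in for automatically generated variants.
variants = [
    "What is the notice period for terminating this contract?",
    "How much notice must be given to end this contract?",
]
grid = build_run_grid(variants, temperatures=[0.0, 0.7], top_ps=[0.9, 1.0],
                      samples_per_cell=3)
print(len(grid), "runs planned")
```

Each `request` dictionary could then be passed to a provider-agnostic client, for example `litellm.completion(model=..., **run["request"])`, with the model name chosen by the user. The network call is deliberately left out so that the sketch runs offline.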

Analysis Layer

- State-of-the-art embedding models (MTEB leaderboard validated)

- Clustering algorithms revealing semantic response patterns

- Statistical metrics quantifying stability and rare-event detection
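The kind of analysis described for this layer can be sketched in a few lines. The example below is a simplified stand-in, not the clustering algorithm TEREX uses: it groups toy embedding vectors with a greedy cosine-similarity threshold, then reports the share of the dominant cluster, a normalized entropy as one possible stability metric, and any clusters small enough to count as rare events. The names `greedy_cluster` and `stability_report`, the thresholds, and the data are all illustrative assumptions.

```python
import math
from collections import Counter


def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def greedy_cluster(embeddings, threshold=0.9):
    """Assign each vector to the first cluster seed it resembles, else open a new cluster."""
    seeds, labels = [], []
    for vec in embeddings:
        for idx, seed in enumerate(seeds):
            if cosine_similarity(vec, seed) >= threshold:
                labels.append(idx)
                break
        else:
            seeds.append(vec)
            labels.append(len(seeds) - 1)
    return labels


def stability_report(labels, rare_share=0.15):
    """Summarize how concentrated the responses are and which clusters are rare."""
    total = len(labels)
    shares = {label: count / total for label, count in Counter(labels).items()}
    entropy = -sum(p * math.log(p) for p in shares.values())
    max_entropy = math.log(len(shares)) if len(shares) > 1 else 1.0
    return {
        "dominant_share": max(shares.values()),
        "normalized_entropy": entropy / max_entropy,  # 0 = one cluster, 1 = evenly spread
        "rare_clusters": sorted(l for l, p in shares.items() if p <= rare_share),
    }


# Nine near-identical responses plus one semantic outlier (invented toy data).
toy_embeddings = [[1.0, 0.01 * i] for i in range(9)] + [[0.05, 1.0]]
labels = greedy_cluster(toy_embeddings)
report = stability_report(labels)
print(labels, report)
```

Greedy threshold clustering depends on the order of the inputs and on the chosen threshold, so it is best read as a placeholder for whichever clustering method is actually used.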

Visualization Layer

- Revolutionary 3D semantic tree representations

- Interactive exploration of reasoning pathways

- Intuitive interfaces for domain experts without statistical backgrounds

Solution Architecture

TEREX is more than an incremental improvement: it establishes a new category of AI governance tooling.

Unique Value Proposition

Traditional benchmarks evaluate models at single points. TEREX maps entire reasoning landscapes.

The framework delivers:

For Compliance Officers

- Auditable documentation of model behavior across operational scenarios

- Quantified risk metrics aligned with regulatory requirements

- Transparent decision factors supporting explainable AI mandates

For Technical Teams

- Algorithmic prompt optimization guided by statistical evidence

- Domain-specific detection of both high-quality reasoning and hallucination patterns

- Systematic validation of agentic reasoning architectures

For Strategic Decision-Makers

- Data-driven model selection replacing vendor marketing claims

- Competitive intelligence through comparative reasoning analysis

- Investment protection via continuous performance monitoring

Strategic Positioning

The architecture’s modular design enables progressive deployment:

Phase 1: Assessment

Organizations begin with standalone evaluation of candidate models, establishing baseline performance metrics and identifying optimization opportunities.

Phase 2: Integration

TEREX connects to existing MLOps pipelines, providing continuous monitoring as models evolve and operational contexts shift.

Phase 3: Optimization

Advanced users leverage the framework’s insights to guide fine-tuning strategies, prompt engineering refinement, and architectural decisions.

Investment Rationale

Early adopters gain asymmetric advantages:

The framework’s mathematical foundation is independent of any particular model, which supports its longevity beyond current model generations. As reasoning capabilities advance, TEREX’s statistical approach scales naturally: more sophisticated models simply reveal richer landscapes to explore.

Organizations establishing TEREX-based governance frameworks today position themselves as natural authorities when regulatory requirements crystallize. The documentation and metrics generated become institutional knowledge, defensible in audits and valuable in competitive positioning.

Perhaps most significantly, the framework transforms AI deployment from art to engineering. Decisions grounded in systematic evidence compound over time, creating organizational capabilities that competitors cannot easily replicate.

TEREX: Where Statistical Rigor Meets Strategic Foresight

Transforming reasoning uncertainty into competitive intelligence.