Market Demand

The exponential growth of enterprise data presents a fundamental challenge: extracting actionable intelligence from vast, unstructured information repositories. Organizations struggle with documents spanning hundreds of pages, codebases containing millions of lines, and knowledge bases that exceed traditional processing capabilities.

Current approaches fragment context, losing critical relationships between information elements. This fragmentation creates blind spots in decision-making, compliance gaps in regulatory documentation, and inefficiencies in knowledge work. The market demands solutions that preserve semantic coherence across extended contexts while maintaining computational efficiency.

Swiss enterprises face this challenge most acutely in regulated industries such as financial services, pharmaceuticals, and legal services, where comprehensive document understanding directly impacts compliance, risk management, and competitive advantage. The ability to reason across entire document collections, not merely retrieve isolated fragments, represents the next frontier in intelligent information management.

Core Technology

Our approach fundamentally reimagines context management through intelligent document decomposition and orchestrated information synthesis.

Rather than treating long documents as monolithic entities or arbitrarily segmented chunks, we implement hierarchical summarization architectures where each segment contains both local detail and global context. This ensures that no query operates in isolation from the document’s broader semantic structure.
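As a rough illustration of the idea, the sketch below attaches a section-level and a document-level summary to every segment. The names `Segment`, `summarize`, and `build_segments` are hypothetical, and `summarize` is a trivial placeholder (it keeps the first few words) standing in for whatever summarization model a real deployment would use.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    index: int
    text: str              # local detail
    section_summary: str   # summary of the enclosing section
    document_summary: str  # summary of the whole document

    def as_prompt_context(self) -> str:
        """Render the segment together with its global context."""
        return (
            f"Document summary: {self.document_summary}\n"
            f"Section summary: {self.section_summary}\n"
            f"Segment {self.index}: {self.text}"
        )


def summarize(text: str, max_words: int = 12) -> str:
    """Placeholder summarizer (assumption): keeps the first few words.

    A real system would call a summarization model here.
    """
    return " ".join(text.split()[:max_words])


def build_segments(sections: list[list[str]]) -> list[Segment]:
    """Build segments from a document given as sections of paragraphs."""
    section_summaries = [summarize(" ".join(paragraphs)) for paragraphs in sections]
    document_summary = summarize(" ".join(section_summaries))
    segments: list[Segment] = []
    for section_summary, paragraphs in zip(section_summaries, sections):
        for paragraph in paragraphs:
            segments.append(
                Segment(len(segments), paragraph, section_summary, document_summary)
            )
    return segments
```

Any query answered against `segment.as_prompt_context()` then sees the local text alongside the summaries of its enclosing section and document.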

We deploy multi-perspective summarization that adapts to query characteristics, generating different contextual representations based on anticipated information needs. Unlike static vectorization, which can lose nuance during dimensionality reduction, this dynamic approach tailors the representation to each query.
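One simple way to picture query-adaptive representation is to keep several summaries of the same segment, one per perspective, and pick the one that best matches the query. The sketch below is an assumption-laden toy: the perspective names, the keyword sets, and the keyword-overlap scoring are all hypothetical and are not taken from the framework itself.

```python
import re

# Hypothetical perspectives and trigger keywords (assumptions for illustration).
PERSPECTIVE_KEYWORDS = {
    "compliance": {"regulation", "audit", "obligation", "compliance"},
    "risk": {"risk", "exposure", "liability"},
    "technical": {"api", "architecture", "code", "schema"},
}


def pick_perspective(query: str, default: str = "technical") -> str:
    """Choose the perspective whose keywords overlap most with the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {name: len(words & kws) for name, kws in PERSPECTIVE_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default


def context_for(query: str, summaries: dict[str, str]) -> str:
    """Return the pre-computed summary matching the query's perspective."""
    return summaries[pick_perspective(query)]
```

A production system would more plausibly score perspectives with a classifier or a model call; the keyword match only shows where that decision sits in the flow.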

Our orchestrated query distribution transforms single-point retrieval into systematic exploration across information landscapes. By posing queries hundreds of times across intelligently structured segments, we construct robust understanding that captures both central themes and edge cases—the statistical foundation for reliable reasoning.
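The fan-out described here can be sketched as a job plan: one query, posed several times against every segment. `QueryJob`, `plan_jobs`, and `run_jobs` are hypothetical names, and `ask` stands in for whichever model client is actually used; the prompt template is likewise an assumption.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class QueryJob:
    segment_id: int
    repeat: int
    prompt: str


def plan_jobs(query: str, segments: list[str], repeats: int) -> list[QueryJob]:
    """Create one job per (segment, repeat) pair for a single query."""
    return [
        QueryJob(seg_id, rep, f"Context:\n{seg}\n\nQuestion: {query}")
        for seg_id, seg in enumerate(segments)
        for rep in range(repeats)
    ]


def run_jobs(jobs: list[QueryJob], ask: Callable[[str], str]) -> dict[int, list[str]]:
    """Execute every job and group the raw answers by segment.

    `ask` is a placeholder for a model call (assumption).
    """
    answers: dict[int, list[str]] = {}
    for job in jobs:
        answers.setdefault(job.segment_id, []).append(ask(job.prompt))
    return answers
```

With 50 segments and 4 repeats, a single question becomes 200 model calls, which is why the routing and cost considerations discussed later matter.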

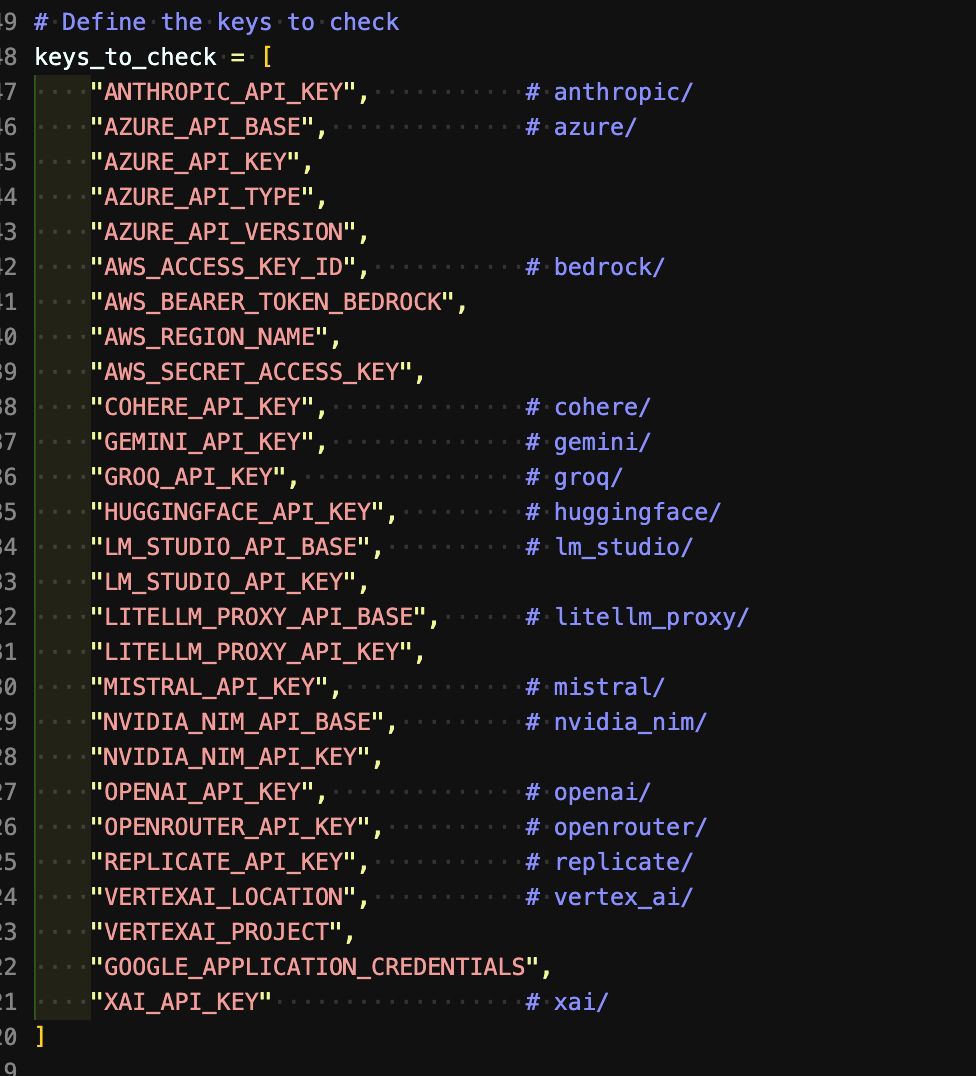

The architecture integrates seamlessly with both traditional RAG pipelines and emerging concept-level embeddings (inspired by Meta’s Large Concept Models), operating beyond token-level representations to capture semantic relationships at the sentence, paragraph, and document levels.

Solution Architecture

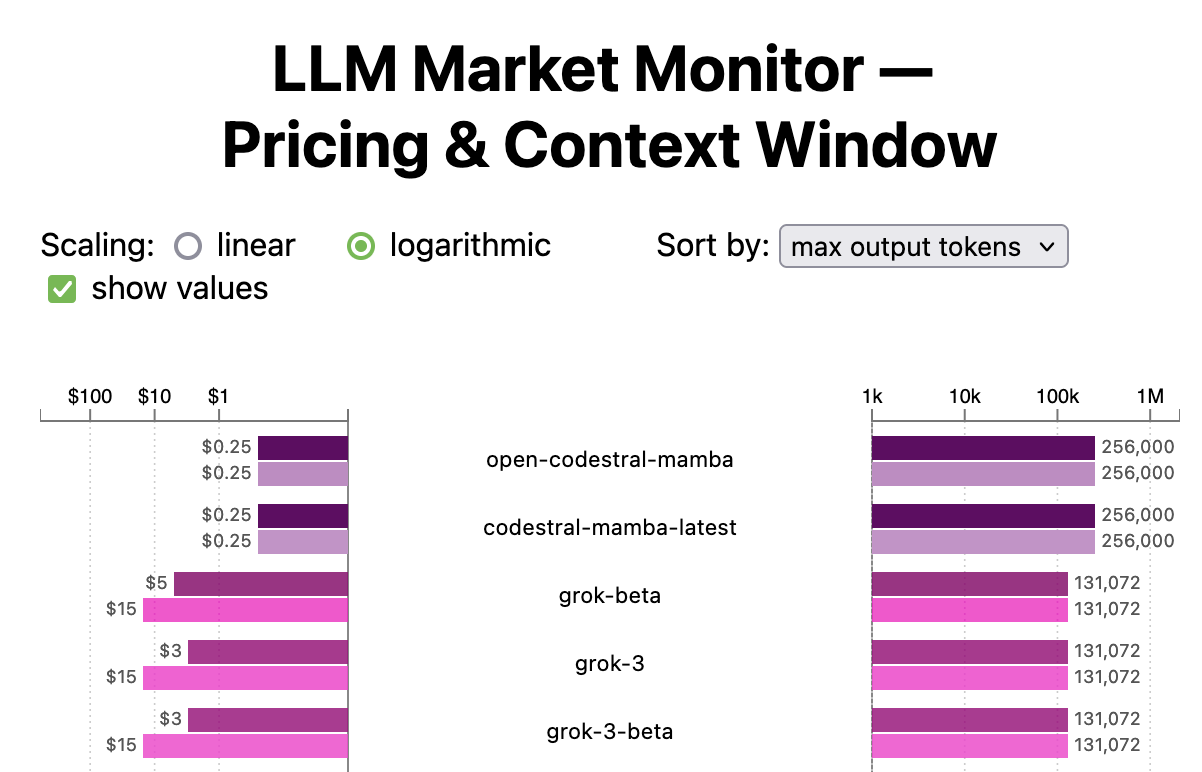

Our implementation follows a three-tier strategy optimized for cost-efficiency and performance:

Tier 1: Strategic Architecture Events

Frontier models (GPT-4, Claude) are deployed selectively for high-level architectural decisions and comprehensive codebase analysis in large-scale projects. They are reserved for critical junctures where holistic understanding justifies premium computational costs.

Tier 2: Operational Development

Flat-rate coding assistants (GitHub Copilot) serve as the primary development interface, providing continuous support for routine coding tasks, refactoring, and incremental development. This tier balances capability with economic sustainability for daily operations.

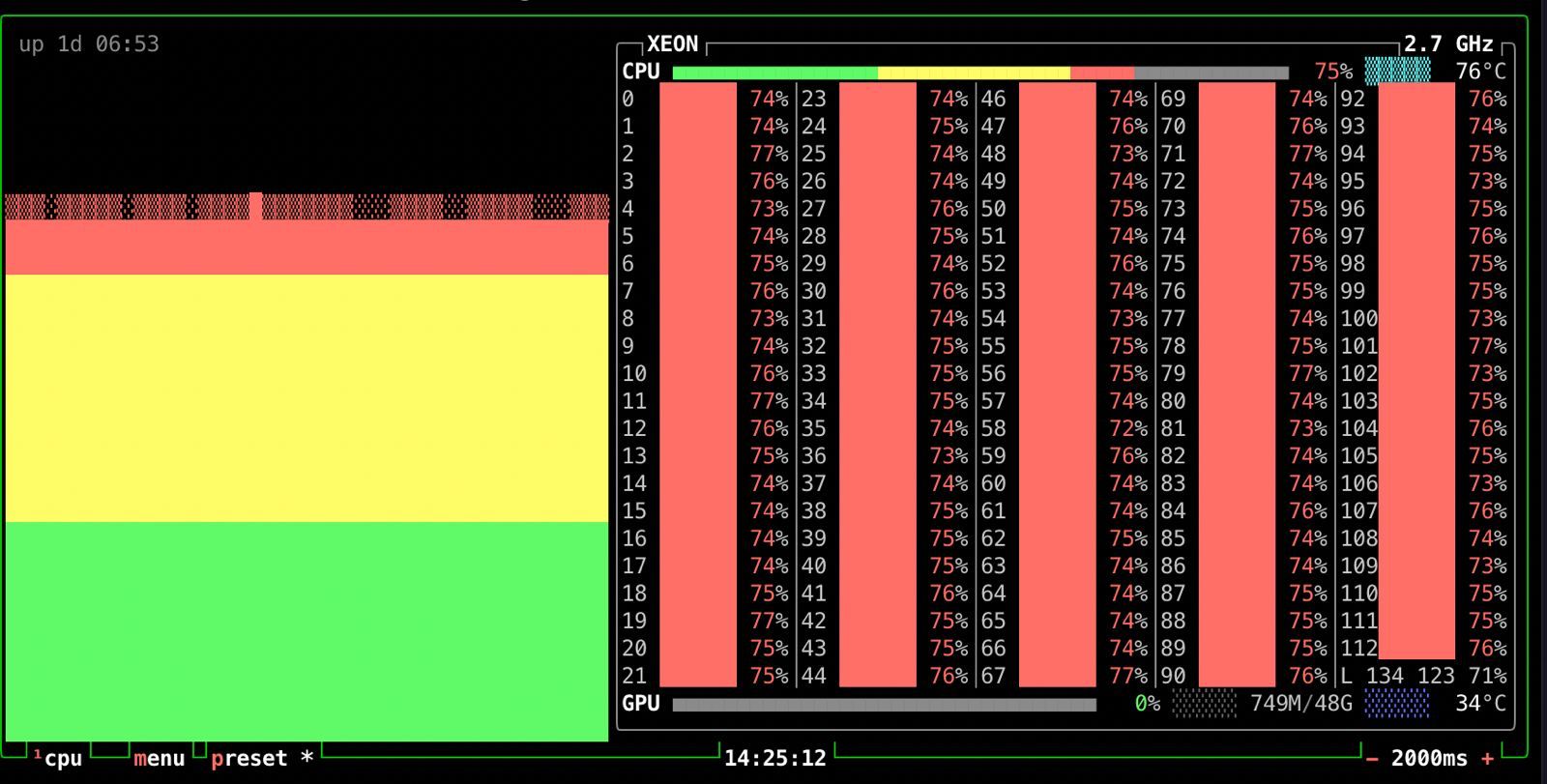

Tier 3: Extended Context Processing

Local LLMs with 500K-1M token contexts handle frequent large-scale operations—standard refactoring, comprehensive code reviews, extensive documentation analysis. Enhanced with RAG tooling and optimized search strategies, this tier provides cost-effective processing for extended contexts.

This tiered approach reflects our philosophy: intelligent routing precedes intelligent reasoning. By preprocessing and strategically directing queries, we optimize both performance and economics across the entire information management pipeline.
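A minimal sketch of such a router is shown below. The `Tier` enum mirrors the three tiers above, but the task labels and the `assistant_limit` token threshold are illustrative assumptions, not measured cut-offs.

```python
from enum import Enum


class Tier(Enum):
    FRONTIER = "tier 1: frontier model"
    ASSISTANT = "tier 2: flat-rate coding assistant"
    LOCAL_LONG_CONTEXT = "tier 3: local long-context model"


def route(task_kind: str, context_tokens: int, assistant_limit: int = 100_000) -> Tier:
    """Pick a tier from a coarse task label and the context size.

    `task_kind` values and `assistant_limit` are hypothetical; tune them
    to the models and budgets actually in use.
    """
    if task_kind == "architecture":
        # Rare, high-stakes decisions justify the premium tier.
        return Tier.FRONTIER
    if context_tokens > assistant_limit:
        # Large but routine work goes to the local long-context model.
        return Tier.LOCAL_LONG_CONTEXT
    # Everything else stays with the day-to-day assistant.
    return Tier.ASSISTANT
```

For example, `route("refactor", 600_000)` selects the local long-context tier, while `route("refactor", 2_000)` stays with the coding assistant.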

Unique Technology Attributes

Context Preservation Through Hierarchical Intelligence

Unlike conventional chunking that severs semantic relationships, our multi-level summarization maintains global coherence within local segments. Each information unit carries awareness of its position within the broader knowledge structure.

Adaptive Information Representation

Our multi-criteria summarization dynamically adjusts to query characteristics, generating contextual representations optimized for specific information needs rather than generic embeddings that average away nuance.

Statistical Robustness Through Orchestration

Repeated query distribution across structured segments transforms stochastic LLM outputs into statistically robust insights. This approach, foundational to our TEREX framework, reveals both consensus patterns and rare-event reasoning that single-pass retrieval misses entirely.
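To make the statistical idea concrete, the sketch below tallies repeated answers to the same question and separates the consensus from low-frequency outliers. It is a generic frequency count, not the TEREX implementation; `summarize_answers` and the `rare_threshold` default are assumptions chosen for illustration.

```python
from collections import Counter


def summarize_answers(answers: list[str], rare_threshold: float = 0.1) -> dict:
    """Tally repeated answers into a consensus and a list of rare answers.

    Answers are normalized by trimming whitespace and lowercasing; a real
    system would likely need semantic clustering instead (assumption).
    """
    if not answers:
        raise ValueError("at least one answer is required")
    counts = Counter(a.strip().lower() for a in answers)
    total = sum(counts.values())
    consensus, consensus_count = counts.most_common(1)[0]
    rare = sorted(
        answer
        for answer, count in counts.items()
        if answer != consensus and count / total <= rare_threshold
    )
    return {
        "consensus": consensus,
        "consensus_share": consensus_count / total,
        "rare": rare,
    }
```

The rare answers are not discarded: they are the candidates for the edge cases that a single retrieval pass would likely never surface, and they can be flagged for review.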

Cost-Optimized Routing Intelligence

Microsoft’s twelve-year evolution from Bing Code Search to GitHub Copilot demonstrates the critical importance of preprocessing and intelligent routing. We implement this lesson systematically, ensuring computational resources align with task requirements.

Regulatory-Ready Transparency

Our segmentation and orchestration strategies create natural audit trails. Each reasoning step, each information synthesis, each context boundary becomes documentable—transforming context management from black-box retrieval into explainable information architecture.
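One way such an audit trail could look is a log with one record per reasoning step, where each record pins the exact segment text by hash. The record layout and the names `AuditRecord`, `record_step`, and `to_jsonl` are hypothetical; only standard-library calls are used.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class AuditRecord:
    step: int
    segment_id: int
    query: str
    answer: str
    segment_sha256: str  # fingerprint of the exact text the model saw


def record_step(
    step: int, segment_id: int, segment_text: str, query: str, answer: str
) -> AuditRecord:
    """Create an audit record for one query against one segment."""
    digest = hashlib.sha256(segment_text.encode("utf-8")).hexdigest()
    return AuditRecord(step, segment_id, query, answer, digest)


def to_jsonl(records: list[AuditRecord]) -> str:
    """Serialize records as JSON Lines, one reasoning step per line."""
    return "\n".join(json.dumps(asdict(r), sort_keys=True) for r in records)
```

Storing the hash rather than the text keeps the log compact while still letting an auditor verify which version of a segment produced a given answer.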

Seamless Integration Across Paradigms

Whether deployed with traditional RAG, concept-level embeddings, or hybrid approaches, our framework adapts to existing infrastructure while elevating performance. This compatibility accelerates adoption without requiring wholesale architectural replacement.

Transforming context limitations into competitive advantages through intelligent orchestration and strategic information architecture.